AI Washing: AI or not AI, That is the Question

What is AI Washing? How bad is it? How can you prove it, and what can companies do about it? "AI Washing" is a company's marketing effort to advertise that its products or services contain artificial intelligence, even though this is only weakly…

Where is Predictive Analytics used?

Surprising ways predictive analytics is used. Predictive analytics isn’t new. In fact, there’s a very old story about target loyalty and number crunching that’s now the stuff of textbooks. The possibilities offered by AI models have also…

What we can learn from the most popular AI scandals

With AI front and centre in our collective consciousness, it’s no surprise that it has also had its fair share of controversy. AI scandals are not that numerous (yet), but they are impactful. So, today we’ll look at some famous AI scandals…

Study: Automated, Artificial Intelligence (AI)-based pricing versus human-based pricing in B2B

This study further explores the potential of automating the B2B salesperson’s pricing decisions. You'll learn the results of a field experiment conducted by Yael Karlinsky-Shichor (School of Business at Northeastern) and Oded Netzer (Columbia…

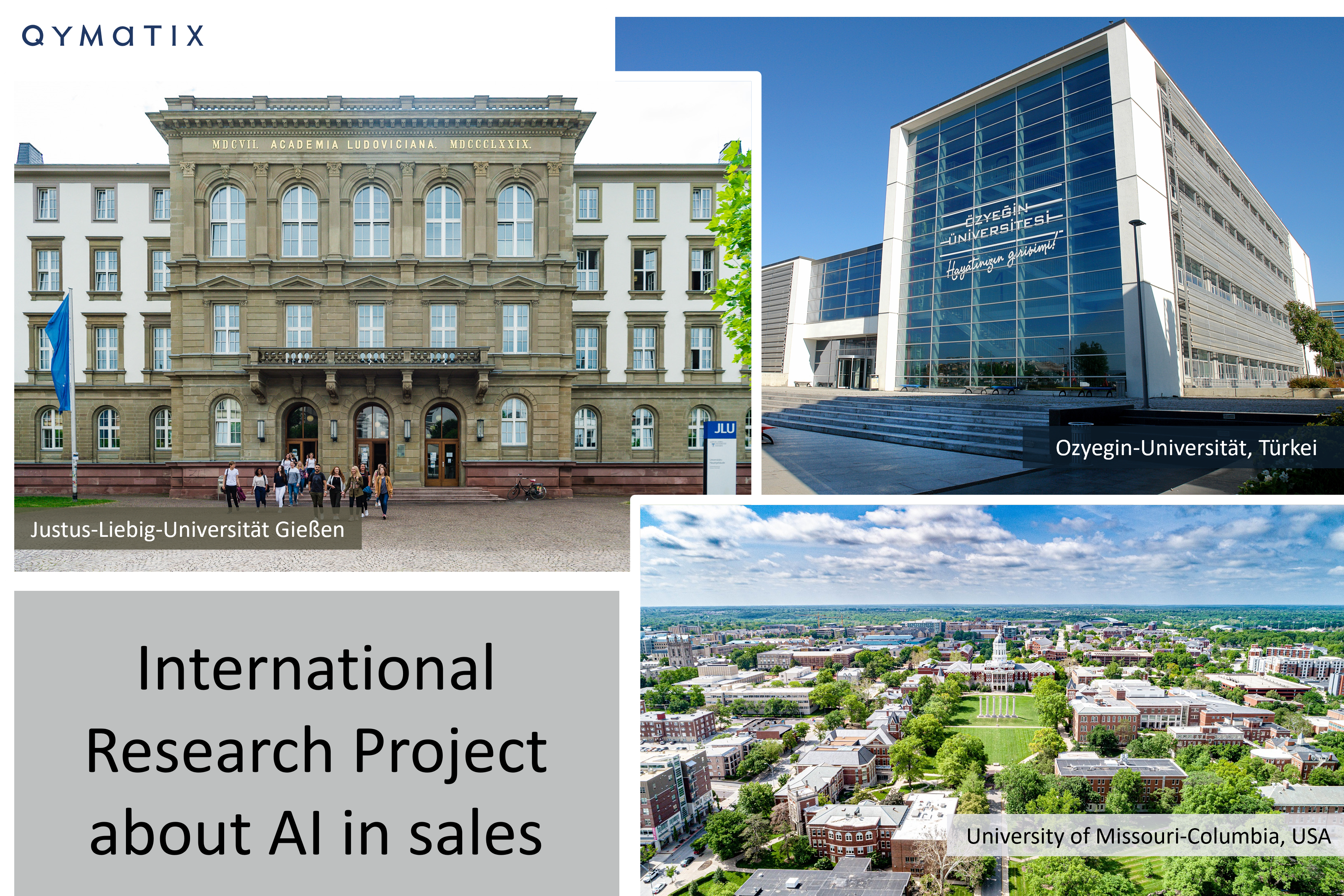

Qymatix supports international research project in sales and artificial intelligence

Karlsruhe, 18.11.2021. Qymatix supports an exciting international research project: three researchers from three different universities have joined forces on "Success in sales through the use of artificial intelligence…